I was looking at code.golf the other day and I wondered which languages were the least verbose, so I did a little data gathering.

I looked at 48 different languages that had completed 79 different code challenges on code.golf. I then gathered the results for each language and challenge. If a “golfer” had more than 1 submission to a challenge, I grabbed the most recent one. I then dropped the top 5% and bottom 5% to hopefully mitigate most outliers. Then came up with an average for each language, for each challenge. I then averaged the results across each language and that is what you see here.

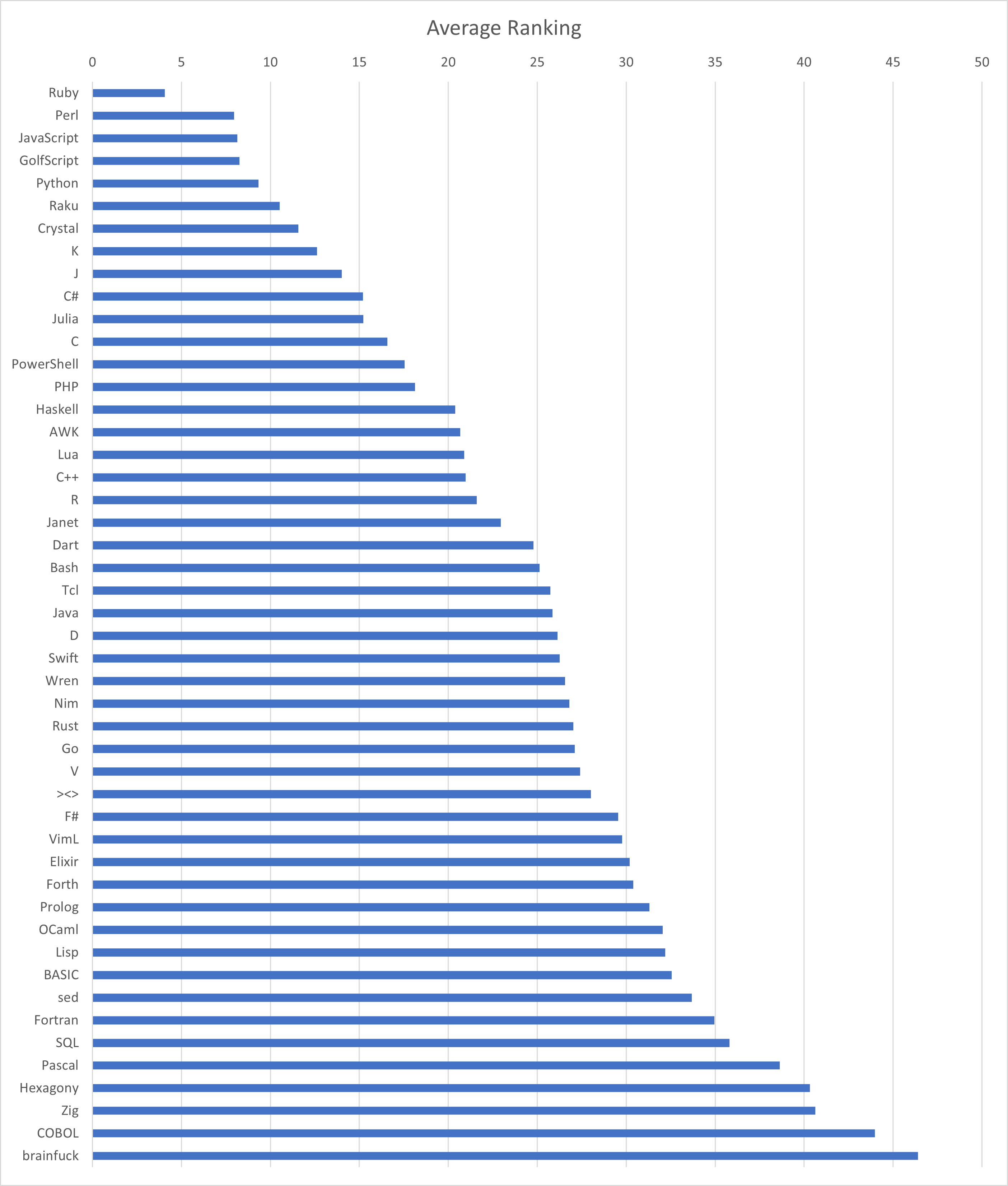

For another perspective, I ranked each challenge then got the average ranking across all challenges. Below is the results of that.

Disclaimer: This is in no way scientific. It’s just for fun. If you know of a better way to sort these results please let me know.

But a big problem with this dataset is error handling - or really the complete lack thereof. Real code needs to deal with errors and they can add a lot depending on the language.

I was very surprised to see rust and go so close as I find go vastly more verbose due to error handling and need to reimplement things like searching a list. But code golf type problems ignore these types of things that you see in real code.

So there is not really and useful conclusion that can be made except if you spend all day writing code golf problems.

That’s true, and you can also combine multiple errors to have a single catch block or handle each error separately. The perfect dataset for this comparison will need to be written. Code golf data is good enough for a non-academic fun analysis like this one.