
Thanks. That’s a good ELI5. Fortunately, I managed to make sense of it before your reply, but the link to environment variables is highly appreciated. As I already replied to someone else, I had no idea PATH was a global/environment variable. I just assumed it was telling me to specify a path, so I didn't know I needed to RTFM, and it confused me greatly. On top of that, I made another mistake, which confused me even more: even once I had finally set it up correctly, it made me think I was still doing it wrong.

I finally gave up at CMake, as I really need to RTFM more on that; it started throwing a lot of errors at me.

I’m fine with RTFM, but I had no idea PATH is a global variable and assumed it was telling me to specify the path to something. So I didn't know I needed to RTFM, because I hadn't read enough to understand that I needed to. After the first reply pointed me in the right direction, I managed to make sense of it. English is not my first language, though, and some of the terms are over my head, so I had to RTFM with a dictionary. That took me a long time, especially because I was also doing another thing wrong on top of that, specifically:

I had to use `~/SCALE_PATH` instead of the confusing example of `${SCALE_PATH}`, as running `nvcc --version` did absolutely nothing even though the path was correct.
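For anyone else tripped up the same way: PATH is just an environment variable holding a list of directories, and a bare command like `nvcc` is only found if its directory is in that list. Here's a minimal Python sketch of that lookup, assuming a POSIX system. `find_on_path`, `make_stub` and the `scale-demo-nvcc` stub are hypothetical names made up for illustration; only `os.environ`, `os.path.expanduser` and `shutil.which` are real standard-library calls.

```python
import os
import shutil
import stat
import tempfile
from typing import Optional


def find_on_path(command: str, extra_dir: Optional[str] = None) -> Optional[str]:
    """Resolve `command` by searching the directories listed in PATH, in order.

    If `extra_dir` is given, it is put in front of PATH first, similar to
    export PATH="<dir>:$PATH" in a shell. (Hypothetical helper.)
    """
    search_path = os.environ.get("PATH", "")
    if extra_dir is not None:
        # Expand a leading "~" explicitly so the entry is a real directory path.
        search_path = os.pathsep.join([os.path.expanduser(extra_dir), search_path])
    return shutil.which(command, path=search_path)


def make_stub(directory: str, name: str) -> str:
    """Create a tiny executable shell script standing in for a real tool."""
    path = os.path.join(directory, name)
    with open(path, "w") as f:
        f.write("#!/bin/sh\necho stub\n")
    os.chmod(path, os.stat(path).st_mode | stat.S_IXUSR)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        make_stub(d, "scale-demo-nvcc")
        # Not found: the temp directory is not in PATH yet.
        print(find_on_path("scale-demo-nvcc"))
        # Found once the directory is prepended to PATH.
        print(find_on_path("scale-demo-nvcc", extra_dir=d))
```

The same idea applies in the shell: the directory containing `nvcc` has to end up in PATH before `nvcc --version` can find it.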

I’ve been on Linux since April, so I’ve stumbled a lot but got many things to work; it just takes me a lot of time to get through it, and I really stumbled on this one. Getting ROCm to work was a breeze, and so, most recently, was getting PyTorch with ROCm working for generative AI models on AMD. I’ve also finally started tinkering with toolbox a lot more and now understand its benefits.

Well, I progressed quite a bit and learned a lot more than I knew until now, but I give up. This is way over my head; I’ll stick to using ROCm for now. Thanks for pointing me in the right direction, at least.

Thanks. I’m still not sure I completely understand, but I think this is how it’s supposed to be.

Manjaro and Ubuntu surprised me with how bad they are.

Thanks!

1.) I will definitely give it a try.

3.) I have set the amdgpu feature mask; otherwise I wouldn’t even have access to the power limit, voltages, etc. But VRAM overclocking just does not work. Everything else seems to work fine.
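For context on the feature mask in 3.): as far as I understand it, the overclocking controls are gated behind the PP_OVERDRIVE_MASK bit (0x4000) of the amdgpu `ppfeaturemask` module parameter. Below is a rough Python sketch of turning that bit on, assuming a kernel that exposes the current value at /sys/module/amdgpu/parameters/ppfeaturemask. The helper names are hypothetical, and the printed string is what you would add to the kernel command line in your bootloader config.

```python
import os
from typing import Optional

# PP_OVERDRIVE_MASK bit from the kernel's PP_FEATURE_MASK enum (amd_shared.h).
PP_OVERDRIVE_MASK = 0x4000
# Assumed location of the current module parameter value.
SYSFS_MASK = "/sys/module/amdgpu/parameters/ppfeaturemask"


def current_mask(path: str = SYSFS_MASK) -> Optional[int]:
    """Read the active feature mask, or None if the file doesn't exist here."""
    if not os.path.exists(path):
        return None
    with open(path) as f:
        # Base 0 accepts both "0x..." hex and plain decimal output.
        return int(f.read().strip(), 0)


def with_overdrive(mask: int) -> int:
    """Return the mask with the overdrive bit set; other bits are untouched."""
    return mask | PP_OVERDRIVE_MASK


def kernel_param(mask: int) -> str:
    """Format the mask as a kernel command-line parameter."""
    return f"amdgpu.ppfeaturemask={mask:#x}"


if __name__ == "__main__":
    mask = current_mask()
    if mask is None:
        print("amdgpu ppfeaturemask not found on this system")
    else:
        print(kernel_param(with_overdrive(mask)))
```

ORing in a single bit rather than setting the whole mask to all ones keeps every other feature at whatever the running kernel already uses.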

I’m 100% sure it’s not a cable issue, for many valid reasons, one of the main ones being that the same cable can drive a higher-resolution monitor at a higher refresh rate without issue.

Also, if I just swap the cables between my main monitor and the second one, the same issue still happens with the second monitor, but only on Linux, never on Windows.


No problem! I was interested in the performance, so I may as well share my findings :)

Also important to note: on Windows, the game runs Lumen all the time on DX12, and the only way to disable it is to run the game on DX11. I’m assuming that on Vulkan via Proton the game does not run Lumen at all. The game also seems to support Frame Generation, but I haven’t tried it yet.

Please bear in mind that a custom tune isn’t guaranteed to hold across driver versions. The voltage floor can shift with power management firmware (PMFW) changes delivered in driver packages. This doesn’t overwrite the board VBIOS; the PMFW is loaded at OS runtime, and it is also included in linux-firmware. I’d recommend testing with a Vulkan memory test after each Adrenalin update, and every now and then on Fedora too.

I’m aware. For now it seems to behave consistently. I observed higher average clocks on Linux than on Windows with the same OC, but then again, that may be due to differences in monitoring software or just polling rate.

To be fair, when it’s time to upgrade, Linux support will probably be even worse, since I would be upgrading to even newer hardware than what I have now.


I would hold off on a conclusion for now. Steve from Hardware Unboxed tested both Zen 4 and Zen 5 with the “supposed” fix, and both showed improved performance, so the rough difference between Zen 4 and Zen 5 remained almost the same, as the issue was affecting both. We will need to see more tests before drawing a reasonable conclusion. We don’t yet know whether this also affects the older Zen 3.

The monitors being flipped happened as well. I fixed that by swapping the order of the DP cables on the graphics card.

1.) IDK; this issue sometimes shows up for me on other distros as well. I forgot to mention that it also happens when the monitors go to sleep after inactivity: on wake-up, the second screen sometimes does not wake. That’s why I disabled sleep for the monitors.

2.) So far it works fine after disabling HW acceleration.

4.) No need to waste both of our time. The script now works fine, but thanks for the offer. I don’t even know what half of your sentence means :D

3.) On Windows I use MPT to further modify the card’s behaviour: SoC voltage and clock, FCLK, TDC limits, power limits, etc. Basically, I can easily squeeze out 10% on top of the typical overclocking available via MSI Afterburner or the AMD Adrenalin software. That got me 73rd place for GPU score in Time Spy, which is kinda ridiculous for an air-cooled card.

Cyberpunk likes to draw a lot of current, so my tweaks help alleviate the throttling this causes in typical OC scenarios, as the card hits the current limit in the CP2077 benchmark more often than it hits the actual power limit. That’s why the lows on Linux are worse; it’s not related to the CPU underperforming. I suspect the lows would actually be better if I could uncap the GPU TDC current limit on Linux. Averages would likely still be lower than on Windows due to the lack of VRAM OC.

This is not really comparable, and I would have to do a proper test on both Windows and Linux with the same game version, but I’ve tested with the same settings: FOV = 100, SSR = Low (because it performs like crap on higher settings for no visual benefit), everything else maxed out.

This is a screenshot from the run I did in February with my Windows OC (I also had a worse CPU and memory tune back then than what I run now, so the results would be slightly better now as well).

And this is from right now on Fedora 40 (not sure why CP2077 detects it as Windows 10).

It would be interesting to keep the same game version and the same GPU, CPU and DRAM tune and do a direct comparison, but I can’t really be bothered to mess with that right now. What’s important to me is that it’s roughly in the same ballpark and there are no massive swings in performance, so unless I keep a close eye on monitoring, I can hardly tell a difference when playing.

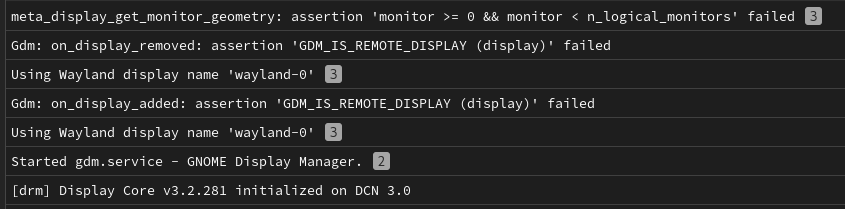

Yes, I’m using the FOSS drivers. I’ll try to look at the logs next time it happens.

RX 6800 XT

1.) I don’t think it’s a driver issue. For some reason the display just does not get picked up during boot, yet the system still behaves as if there were 2 monitors connected.

2.) I tried disabling HW acceleration in Steam. So far so good, but I haven’t used it long enough to see whether it’s completely fixed.

3.) AMD changes VRAM timings along with the clock; it’s not just a simple clock change. That’s also why a negative offset affects VRAM stability. I don’t think CoreCtrl compensates for this in its VRAM tuning.

Thanks a lot. This is actually quite simple, and I overcomplicated things for no reason. It’s also fully tested and working, as I just got a kernel update.

I understood your first comment as if I needed two separate script files, with one calling the other…

I wouldn’t call this a specialized setup. VRR has been around for almost 15 years. I’ve dealt with VRR issues on Windows, and they are still present in some instances with mixed non-VRR and VRR monitor setups; they just manifest differently on Linux due to the different compositor. My experience last year on KDE 5 was fine in games; it was on the desktop that VRR caused me strange issues. Now I don’t have any issues on GNOME, even though VRR is still just an experimental feature there.

OC-wise, yes. This is kinda niche, and I’m glad it at least works to some extent. I can squeeze more performance out of my GPU on Windows, but even the software that lets me do it is not well known and is very niche and finicky on Windows, and for typical OC everything works fine on Linux except VRAM OC.

Weird mice… yes. After setting up my mouse on Windows, I saved my profile to the on-board memory and uninstalled that crappy software, so I don’t need it for my mouse to be usable.

HDR… I don’t really care about it right now. I’ve tried it, and in my subjective opinion SDR still looks “better”.

I really have to disagree with your take that I would have to limit myself and “keep it simple” to use Linux, because nothing is guaranteed and I will sooner or later run into some weird issue with my setup despite thinking it’s “common enough”. Most of these requirements are not weird in the slightest. And yes, I do appreciate the work that has been and is being done.

I’m upgrading ASAP just for ROCm 6.2, because for some reason almost nothing seems to work since I updated to 6.1.2.